4 Things Industry 4.0 10/20/2025

Happy October 20th, Industry 4.0!

If technology were rocket fuel, manufacturing would still be checking the launchpad for cracks.

OpenAI just struck a multi-year deal with Broadcom to build its own custom AI chips, a consortium that includes Nvidia agreed to buy Aligned Data Centers in a $40 billion deal, and ByteDance unveiled a robot brain that actually understands plain English. Meanwhile, manufacturing is still waiting for its ChatGPT moment.

Turns out, the bottleneck isn't the AI—it's the boring infrastructure no one talks about. Geometry kernels. Custom silicon. Data centers that can actually handle industrial workloads. This week, we're looking at why the flashy stuff doesn't matter until the foundation is built.

Let's talk about the unglamorous tech stack that's about to unlock AI in manufacturing.

Why Hasn't Manufacturing Had Its ChatGPT Moment Yet?

The question isn't whether AI will transform manufacturing. It's why it hasn't already.

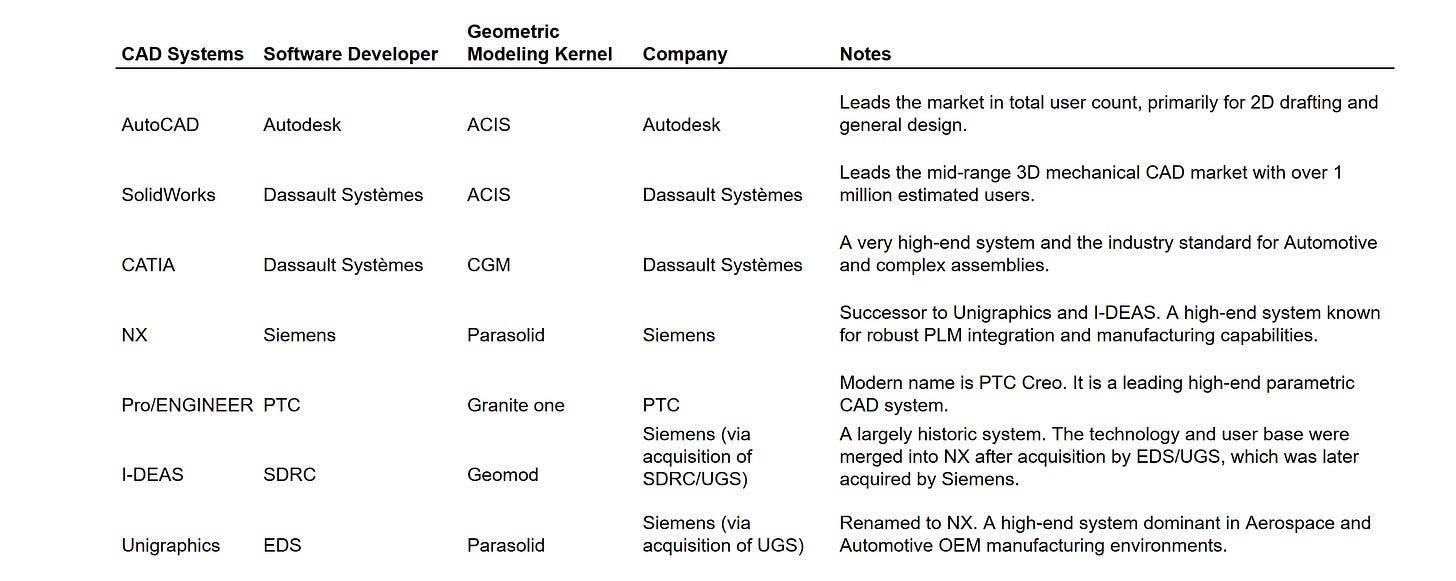

A deep dive from The Shear Force reveals the hidden bottleneck: geometry kernels. These are the mathematical engines inside CAD software that define how every part, assembly, and product gets designed. And here's the problem: they're controlled by a handful of companies whose entire business model depends on keeping them closed and proprietary.
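To make "mathematical engine" a little more concrete, here is a toy Python sketch of one primitive query a kernel answers constantly: where does a line meet a circle? Production kernels work with NURBS surfaces, B-rep topology, and carefully managed tolerances, so treat this as an illustration only. The function name and the fixed tolerance are assumptions, not any vendor's API.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def intersect_line_circle(p0: Point, p1: Point, center: Point, radius: float,
                          tol: float = 1e-12) -> List[Point]:
    """Intersect the infinite line through p0 and p1 with a circle.

    Hypothetical helper: returns 0, 1 (tangent), or 2 points, ordered along
    the direction p0 -> p1. The fixed absolute tolerance is a simplification.
    """
    dx, dy = p1[0] - p0[0], p1[1] - p0[1]
    fx, fy = p0[0] - center[0], p0[1] - center[1]
    # Substitute P(t) = p0 + t*(p1 - p0) into |P - center|^2 = r^2 -> a*t^2 + b*t + c = 0.
    a = dx * dx + dy * dy
    if a == 0.0:
        raise ValueError("p0 and p1 must be distinct points")
    b = 2.0 * (fx * dx + fy * dy)
    c = fx * fx + fy * fy - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < -tol:
        return []  # the line misses the circle
    if disc <= tol:
        t = -b / (2.0 * a)
        return [(p0[0] + t * dx, p0[1] + t * dy)]  # tangent: one touching point
    root = math.sqrt(disc)
    ts = ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a))  # a > 0, so ts is ascending
    return [(p0[0] + t * dx, p0[1] + t * dy) for t in ts]


print(intersect_line_circle((-2.0, 0.0), (2.0, 0.0), (0.0, 0.0), 1.0))  # [(-1.0, 0.0), (1.0, 0.0)]
print(intersect_line_circle((-2.0, 1.0), (2.0, 1.0), (0.0, 0.0), 1.0))  # [(0.0, 1.0)]
```

Operations like trims and booleans lean on intersection queries of this kind, just on vastly more complex geometry, which is part of why a robust kernel is so hard to build from scratch.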

Whoever builds the first truly capable AI foundation model for CAD will control a critical layer of industry infrastructure: think of it as the operating system for making physical things. But the incumbents that own the major kernels (Siemens, Dassault, PTC) have zero incentive to open them up, even if doing so would accelerate innovation across the entire manufacturing sector.

The takeaway? If the US wants to maintain industrial competitiveness, it needs to treat geometry kernels as strategic infrastructure, not just commercial software. The market has had decades to produce an open alternative and hasn't—which means coordinated public investment might be the only path forward.

This is the unsexy infrastructure layer that determines whether AI can truly transform how we design and build things.

Robots That Actually Understand You

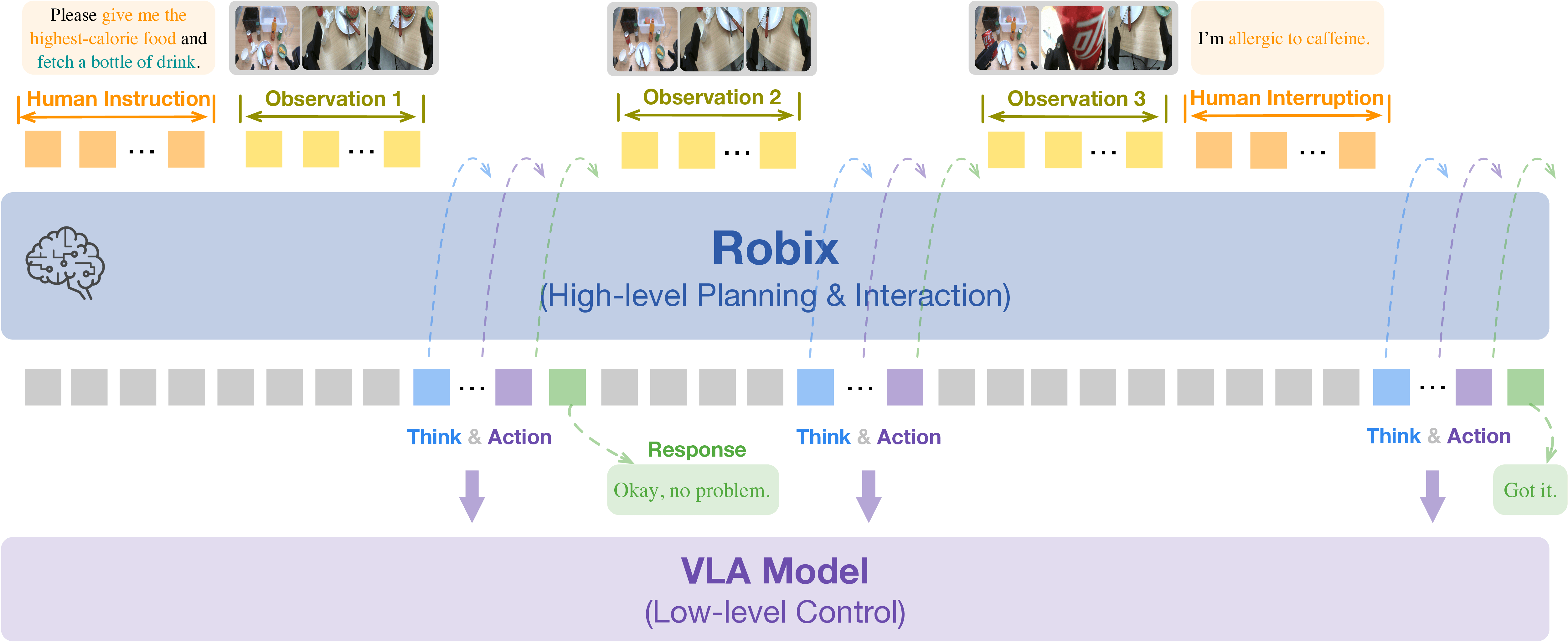

Forget the robotic arms that repeat the same three movements. ByteDance just unveiled Robix, a unified vision-language model that could rewrite what industrial robots can do.

Here's what makes it different: Robix combines reasoning, task planning, and natural language interaction in one framework. That means you can tell a robot "sort these parts by quality and stack them on the blue pallet" and it'll figure out the how—handling complex, long-horizon tasks without needing to be explicitly programmed for every scenario.

The real breakthrough? These robots can communicate back. They don't just execute—they ask clarifying questions, report issues, and provide verbal updates on what they're doing. It's the kind of natural interaction that finally makes collaborative robotics feel less like programming and more like managing a very capable (and very literal) teammate.

Why it matters: As manufacturing systems get more flexible and orders more customized, robots need to adapt on the fly. Robix-style models could be the bridge between "lights-out automation" and "agile manufacturing"—systems that can handle variety without requiring an army of engineers to reprogram them every time something changes.

The $40 Billion Bet on AI Infrastructure

When Nvidia, Microsoft, BlackRock, and xAI team up to drop $40 billion on data centers, you pay attention.

The consortium is acquiring Aligned Data Centers in what's now the largest global data center deal on record. This isn't just about more servers—it's about building the infrastructure backbone that AI-powered manufacturing, logistics, and operations will run on.

Here's the strategic play: As AI models get integrated into everything from predictive maintenance systems to real-time production optimization, companies need massive, reliable computing infrastructure close to their operations. This deal signals that the smart money believes AI won't just be a cloud service—it'll be deeply embedded in how enterprises operate.

The industrial angle: Edge computing and hybrid cloud architectures are becoming critical for manufacturers who need low-latency AI inference on the factory floor. This kind of infrastructure investment makes those deployments feasible at scale.

The deal is expected to close in the first half of 2026, pending regulatory approvals. But the message is already clear: the infrastructure buildout for industrial AI is happening now.

Sponsor Message

MaestroHub is a next-generation industrial data platform enabling manufacturers to unify, contextualize, and orchestrate their operations data. Founded in Turkey with a growing global presence, MaestroHub bridges OT and IT environments through a Unified Namespace (UNS)-based approach, empowering companies to reduce costs, optimize energy use, and improve yield. MaestroHub provides a scalable solution for both mid-sized facilities and large enterprises. Learn More Here: https://maestrohub.com

OpenAI Goes Silicon: The 10-Gigawatt Chip Partnership

OpenAI just made another major infrastructure play: a multi-year partnership with Broadcom to co-develop and deploy 10 gigawatts of custom AI accelerators by the end of 2029.

Here's the breakdown:

- Co-Development: OpenAI leads chip and system design, embedding insights from its frontier AI models

- Development and deployment: Broadcom co-develops and deploys the systems, networked with its Ethernet, PCIe, and optical connectivity solutions

- Timeline: Initial deployments in H2 2026, full rollout by end of 2029

Why does this matter for manufacturing? OpenAI is joining Amazon and Google in the race for custom silicon, aiming for greater control over cost, performance, and supply chain stability. For industrial AI applications—think computer vision systems on production lines, predictive maintenance algorithms, or real-time quality control—this kind of specialized hardware could dramatically lower the cost and improve the performance of AI inference at scale.

The open question: Can OpenAI's custom chips rival Nvidia's performance per watt while scaling to 10GW? Broadcom's stock jumped 10% on the news, so the market thinks they've got a shot.

Bottom line: As AI moves from the cloud to the edge—including factory floors—custom silicon designed specifically for AI workloads could become the norm, not the exception.

👉 Learn more about the partnership

Learning Lens

Advanced MCP + Agent to Agent: The Workshop You've Been Asking For

If you've been building with MCP and wondering how to take it to the next level—multi-server architectures, agent orchestration, and distributed intelligence—this one's for you.

On December 16-17, Walker Reynolds is running a live, two-day workshop that goes deep on Advanced MCP and Agent2Agent (A2A) protocols. This isn't theory—it's hands-on implementation of the patterns that enable collaborative AI systems in manufacturing.

Here's what you'll build:

- Multi-server MCP architectures with server registration, authentication, and message routing

- Agent2Agent communication protocols where specialized AI agents collaborate to solve complex industrial problems

- Production-ready patterns for orchestrating distributed intelligence across factory systems
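For readers new to the idea, here is a minimal Python sketch of the registration, authentication, and routing pattern listed above: tool servers register with a router, prove their identity with a shared token, and each call is routed to whichever server owns the requested tool. Everything here (the `Router` and `ToolServer` classes, the pre-shared-token scheme, the tool names) is hypothetical and deliberately simplified. It is not the MCP SDK's API, nor a preview of the workshop material.

```python
import hmac
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical type: a tool handler takes JSON-like args and returns a JSON-like result.
Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class ToolServer:
    name: str
    tools: Dict[str, Handler]


@dataclass
class Router:
    # Hypothetical auth scheme: one pre-shared token per server name.
    tokens: Dict[str, str]
    routes: Dict[str, ToolServer] = field(default_factory=dict)

    def register(self, server: ToolServer, token: str) -> None:
        expected = self.tokens.get(server.name)
        # compare_digest avoids leaking token contents through timing differences.
        if expected is None or not hmac.compare_digest(expected, token):
            raise PermissionError(f"server {server.name!r} failed authentication")
        # Reject name clashes up front so a failed registration changes nothing.
        clashes = [tool for tool in server.tools if tool in self.routes]
        if clashes:
            raise ValueError(f"tools already registered: {clashes}")
        for tool in server.tools:
            self.routes[tool] = server

    def call(self, tool: str, args: Dict[str, Any]) -> Dict[str, Any]:
        server = self.routes.get(tool)
        if server is None:
            raise KeyError(f"no server registered for tool {tool!r}")
        return server.tools[tool](args)


# Usage: two hypothetical plant-floor servers behind one router.
historian = ToolServer("historian", {"read_tag": lambda a: {"tag": a["tag"], "value": 72.5}})
mes = ToolServer("mes", {"get_work_order": lambda a: {"id": a["id"], "status": "running"}})

router = Router(tokens={"historian": "token-h", "mes": "token-m"})
router.register(historian, "token-h")
router.register(mes, "token-m")
print(router.call("read_tag", {"tag": "Line1/Temp"}))  # {'tag': 'Line1/Temp', 'value': 72.5}
```

Keying routes by tool name keeps the calling agent unaware of which server does the work. A real deployment would also need per-call authorization, a transport layer, and error handling.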

The Format:

- Day 1: Advanced MCP multi-server architectures (December 16, 9am-1pm CT)

- Day 2: Agent2Agent collaborative intelligence (December 17, 9am-1pm CT)

- Live follow-along coding + full recording access for all registrants

Early Bird Pricing: $375 through November 14 (regular $750)

Whether you're architecting UNS environments, building agentic AI systems, or just tired of single-server MCP limitations, this workshop gives you the architecture patterns and implementation playbook to scale.

Why it matters: MCP is rapidly becoming the backbone for connecting AI agents to industrial data. Understanding how to orchestrate multiple servers and enable agent-to-agent collaboration isn't just a nice-to-have—it's the foundation for autonomous factory operations.

Byte-Sized Brilliance

The $100 Million Line of Code

In 1963, Ivan Sutherland created Sketchpad on the TX-2 computer at MIT's Lincoln Laboratory: the first interactive CAD program, letting you draw directly on a screen with a light pen. Revolutionary? Absolutely. But here's the kicker: the geometry behind it was primitive, handling little more than points, lines, and circular arcs.

Fast forward to today, and geometry kernels are worth billions, quietly controlling how every physical product gets designed, from toasters to turbines. The modern geometry engines powering SolidWorks, CATIA, and NX are intellectual descendants of that 1963 breakthrough, refined over 60+ years into some of the most valuable (and most closely guarded) code on the planet.

Sutherland's thesis advisor called Sketchpad "the most important computer program ever written." He wasn't wrong—it just took 60 years and a few trillion dollars of manufacturing to prove it.

Sometimes the foundation you build in a university lab becomes the bottleneck for an entire industry.
