4 Things Industry 4.0 12/2/2025

Happy December 2nd, Industry 4.0!

Welcome back from the long weekend — hopefully your leftovers lasted longer than your phone battery on the drive home. While most of us were trying to remember what day it was, the tech and industrial world didn’t take a holiday pause. Edge-to-cloud partnerships are accelerating, AI is getting more specialized for the factory floor, open-source challengers are pushing Big Tech on performance and price, and hyperscale infrastructure just hit numbers nobody thought were possible.

As we head into the final stretch of 2025, one thing’s clear: the pace of innovation doesn’t care what’s on the calendar. The companies winning right now are the ones using AI, data, and automation not as buzzwords — but as operating principles.

Grab a coffee, shake off the tryptophan, and let’s dive into the tech shaping the final month of the year.

Litmus & Panasonic Team Up to Deliver Edge-to-Cloud IIoT for Japanese Factories

Litmus Automation Japan and Panasonic Solution Technologies have announced a strategic alliance aimed at bringing scalable, edge-to-cloud Industrial IoT solutions to manufacturers across Japan.

What’s happening:

- Their joint offering pairs Litmus Edge, Litmus’s flagship platform for collecting and normalizing real-time data from industrial machines at the edge, with Panasonic’s cloud and system-integration capabilities.

- The stack supports 250+ industrial protocols (OPC UA, Modbus, Ethernet/IP, etc.), so factories can connect legacy and modern equipment without expensive retrofits.

- Once the stack is deployed on-site, data can flow from machines to cloud platforms, MES, or enterprise systems, enabling real-time monitoring, predictive maintenance, AI/ML-based anomaly detection, KPIs, dashboards, and more.
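
To make "collect and normalize" concrete, here is a minimal sketch of the idea: readings that arrive in protocol-specific shapes are mapped into one common record before they go anywhere else. This is not the Litmus Edge API. Every name below (`Reading`, `from_modbus`, `from_opcua`, the register map, the node IDs) is a hypothetical stand-in chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Reading:
    """Common record that downstream systems (MES, cloud, dashboards) consume."""
    asset: str
    metric: str
    value: float
    unit: str
    timestamp: datetime


def from_modbus(asset: str,
                register_map: Dict[int, Tuple[str, float, str]],
                registers: Dict[int, int],
                ts: datetime) -> List[Reading]:
    """Convert raw Modbus register integers using a (metric, scale, unit) map."""
    return [
        Reading(asset, metric, registers[addr] * scale, unit, ts)
        for addr, (metric, scale, unit) in register_map.items()
        if addr in registers
    ]


def from_opcua(asset: str,
               node_map: Dict[str, Tuple[str, str]],
               node_values: Dict[str, float],
               ts: datetime) -> List[Reading]:
    """Convert OPC UA node values (already typed) using a (metric, unit) map."""
    return [
        Reading(asset, metric, float(node_values[node_id]), unit, ts)
        for node_id, (metric, unit) in node_map.items()
        if node_id in node_values
    ]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    legacy = from_modbus("press-1", {40001: ("temperature", 0.1, "degC")}, {40001: 215}, now)
    modern = from_opcua("press-2", {"ns=2;s=Temp": ("temperature", "degC")}, {"ns=2;s=Temp": 22.4}, now)
    for reading in legacy + modern:
        print(reading)
```

The point of the sketch is the shape of the output: once a legacy Modbus press and a newer OPC UA press both emit the same `Reading` type, everything downstream can ignore which protocol the machine speaks.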

Why it matters for manufacturing & 4.0 leaders:

- Many Japanese—and global—factories run on brownfield equipment. This alliance offers a plug-and-play bridge between old machines and modern AI/cloud workflows, without ripping out gear or reengineering lines.

- Edge-to-cloud integration at this scale means you can start small (single line), then scale to multi-site deployments with enterprise-level security and bilingual support.

- It reduces friction for adoption of AI/ML: you get clean data flows and real-time visibility without overhauling infrastructure, which lowers the barrier for predictive maintenance, quality monitoring, and operations optimization.

Bottom line: This partnership doesn’t just promise smart factories—it hopes to deliver a practical migration path for plants stuck on legacy equipment. For anyone building or consulting on Industry 4.0 rollouts, Litmus + Panasonic shows how edge-to-cloud IoT can be industrialized at scale.

IFS and Anthropic Launch “Resolve” — Industrial-Grade AI for Factories, Utilities & Beyond

IFS Nexus Black and Anthropic announced a new partnership to bring advanced AI tools into industrial environments under a product called Resolve. Resolve combines Anthropic’s AI models with IFS’s deep experience in heavy-asset industries (manufacturing, energy, utilities, aerospace, and more) to deliver real-time, safety-aligned AI that can interpret sensor data, video/inspection images, schematics, and logs — then surface actionable alerts and predictions for frontline technicians.

Why this matters: Instead of generic AI assistants built for office tasks, Resolve is tailored for “where the work gets done” — power plants, factory floors, telecom infrastructure, construction sites — with emphasis on reliability, safety, and asset-heavy workflows. Real-world use (e.g., at a distillery) shows the platform helping cut reactive maintenance, reduce downtime, and surface subtle failure signals that traditional monitoring often misses.

Bottom line: Resolve makes industrial AI practical by equipping technicians with tools that augment human judgment, catch issues early, and keep mission-critical systems running smoothly.

DeepSeek AI Gains Traction — Open-Source Model Challenges Big Tech’s Premium Pricing

DeepSeek AI, a startup based in Hangzhou, has released its latest reasoning models (e.g. DeepSeek-V3.2 / V3.2-Speciale) that reportedly rival top-tier models like GPT‑5 and Gemini 3 Pro on complex reasoning and math benchmarks — but at a fraction of the cost.

By using a Mixture-of-Experts (MoE) architecture and efficient training pipelines, DeepSeek claims to drastically cut compute requirements — in some cases reportedly under $6 million for full model training — compared to the hundreds of millions more typical for big-name LLMs.
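
For readers new to MoE, the toy sketch below shows the core routing idea: a gate scores every expert, but only the top `k` experts actually run for a given input, so most parameters stay idle on each step. It is a generic illustration in plain Python, not DeepSeek's implementation. The scalar "experts", the gate scores, and the function names are all assumptions made for the example.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_forward(x: float,
                experts: Sequence[Callable[[float], float]],
                gate_scores: Sequence[float],
                k: int = 2) -> float:
    """Weighted sum of the selected experts only; unselected experts never execute."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(gate_scores, k))


if __name__ == "__main__":
    # Four toy experts; a real model would use neural sub-networks instead.
    experts = [lambda v: v, lambda v: 2 * v, lambda v: 3 * v, lambda v: 4 * v]
    gate_scores = [0.0, 0.0, 5.0, 5.0]  # in a real model the gate computes these from x
    print(route_top_k(gate_scores, k=2))
    print(moe_forward(2.0, experts, gate_scores, k=2))
```

With four experts and `k=2`, half of the expert compute is skipped on every call. Production MoE models apply the same principle with far more experts, which is where the claimed savings come from.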

For organizations building industrial AI, this kind of efficiency unlocks practical use-cases: lower cloud/GPU costs, easier deployment, and models that scale more affordably across edge devices or internal tools.

Bottom line: If the reported numbers hold up, DeepSeek shows that advanced AI reasoning doesn’t have to come with a premium price tag, and that opens the door for wider adoption of AI-driven automation and analytics in industrial and manufacturing settings.

👉 Read more about DeepSeek’s latest release

A Word from This Week's Sponsor

Speaking of the right architecture... (see what we did there?)

If you just read the previous article and thought "yep, that's us—we've got data chaos and systems that don't talk to each other"—meet Fuuz.

Fuuz is the first AI-driven Industrial Intelligence Platform built specifically to solve the data nightmare that's holding manufacturers back. It unifies your siloed systems—MES, WMS, CMMS, whatever alphabet soup you're running—into one connected environment where everything actually talks to each other.

Why it matters:

- Multi-tenant mesh architecture: Deploy on cloud, edge, or hybrid without rebuilding everything

- Low-code/no-code + pro-code: Your business users and developers can both build fast

- Hundreds of pre-built integrations: No more custom integration hell

- Unified data layer: Real-time analytics and AI-powered operations actually work when your data isn't scattered across 47 systems

Whether you're extending existing infrastructure or building new capabilities, Fuuz gives you the foundation to scale industrial applications without the usual pain.

Works for both discrete and process manufacturing. No complete tech stack rebuild required.

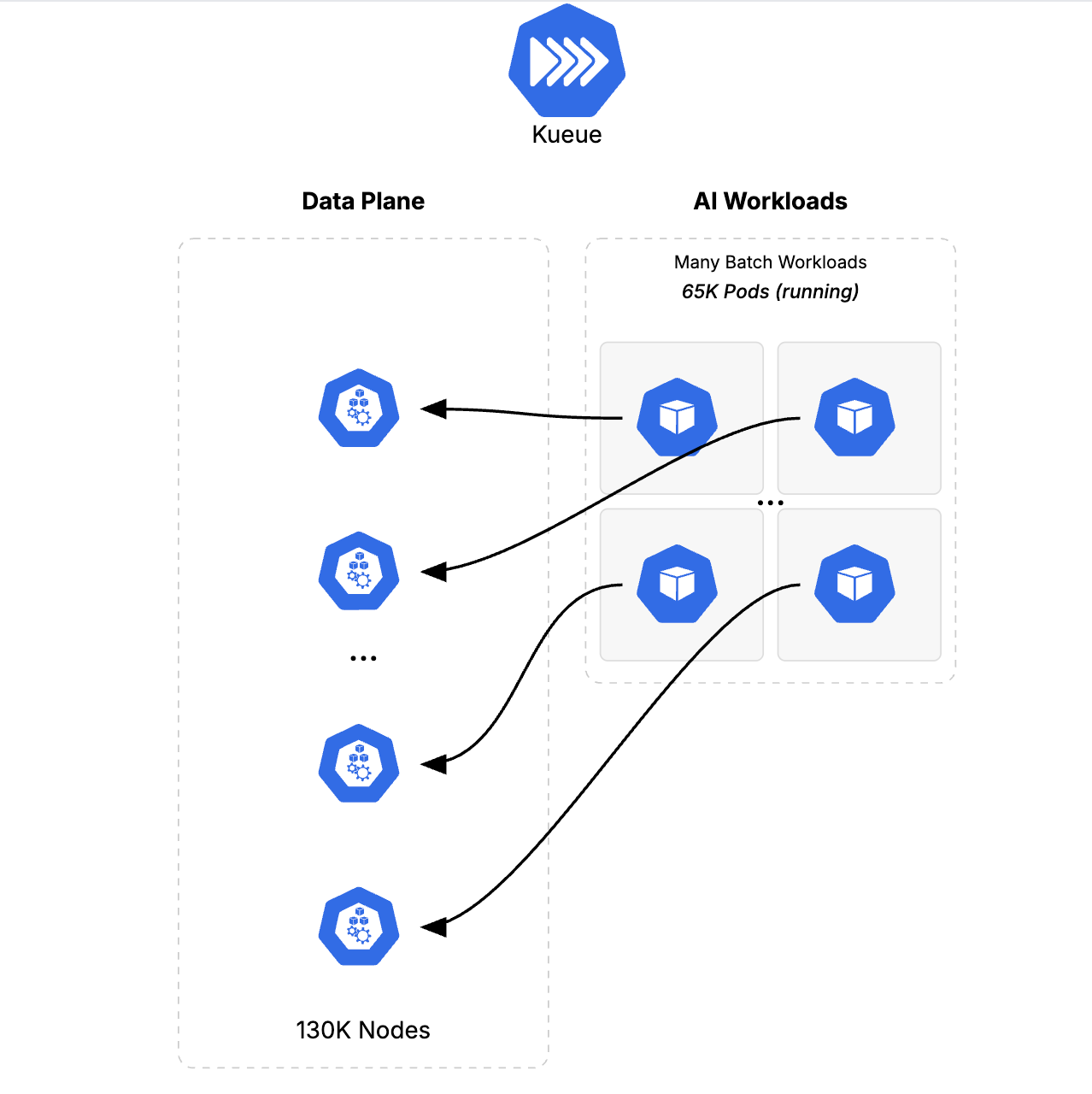

Google Kubernetes Engine (GKE) Achieves Hyperscale: 130,000-Node Cluster Now a Reality

Google Cloud just announced that it successfully ran a 130,000-node cluster using GKE, double the previous production-grade limit of 65,000 nodes.

What changed:

- The team had to overhaul nearly every component of the Kubernetes stack — from control plane scaling and scheduling to network topology, storage backend, and pod orchestration throughput.

- During testing, the cluster sustained ~1,000 pod creations per second and managed storage containing over a million distributed objects, proving it’s not just a scale headline but a fully functional environment.
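
A quick back-of-the-envelope calculation puts those figures in perspective. The node counts and the pod creation rate come from the numbers quoted above (the rate is approximate). The pods-per-node density is a hypothetical value picked only to illustrate the arithmetic.

```python
# Figures quoted in the article; the creation rate is approximate ("~1,000").
NODES = 130_000
PREVIOUS_LIMIT = 65_000
POD_CREATIONS_PER_SECOND = 1_000

# Hypothetical density, not from the article.
PODS_PER_NODE = 10


def seconds_to_create(pods: int, rate: float = POD_CREATIONS_PER_SECOND) -> float:
    """Time needed to create `pods` pods at a sustained creation rate."""
    return pods / rate


scale_factor = NODES / PREVIOUS_LIMIT                        # 2.0
one_pod_per_node = seconds_to_create(NODES)                  # 130.0 seconds
dense_fill = seconds_to_create(NODES * PODS_PER_NODE)        # 1300.0 seconds

if __name__ == "__main__":
    print(f"Scale vs. previous limit: {scale_factor:.1f}x")
    print(f"One pod on every node: {one_pod_per_node:.0f} s")
    print(f"{PODS_PER_NODE} pods on every node: {dense_fill / 60:.1f} min")
```

In other words, at the quoted rate the control plane could place one pod on every node in a little over two minutes, which is why throughput matters as much as the raw node count.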

Why it matters for Industry 4.0 & operations:

- As AI, digital twins, and real-time IIoT workloads balloon, infrastructure needs to scale — not just vertically (more powerful machines), but horizontally (more nodes). GKE’s 130K-node milestone means container orchestration can now match industrial-scale compute demands.

- This kind of scale enables massive distributed compute tasks — e.g. training large AI/ML models, running large-scale simulations, or handling data from thousands of connected devices — without sharding across multiple clusters or cloud providers.

- For manufacturing or enterprise environments, hitting this scale via a managed service lowers operational overhead: you get extreme compute capacity with the robustness and tooling of Kubernetes, without custom orchestration layers.

Bottom line: If you’ve dreamed of running AI-intensive workloads, simulations, or large-scale data processing on containers — but worried Kubernetes couldn’t handle the load — GKE’s new 130,000-node feat suggests the ceiling just got a lot higher.

👉 Read the full deep-dive from Google Cloud: https://cloud.google.com/blog/products/containers-kubernetes/how-we-built-a-130000-node-gke-cluster

Learning Lens

Advanced MCP + Agent to Agent: The Workshop You've Been Asking For

If you've been building with MCP and wondering how to take it to the next level—multi-server architectures, agent orchestration, and distributed intelligence—this one's for you.

On December 16-17, Walker Reynolds is running a live, two-day workshop that goes deep on Advanced MCP and Agent2Agent (A2A) protocols. This isn't theory—it's hands-on implementation of the patterns that enable collaborative AI systems in manufacturing.

Here's what you'll build:

- Multi-server MCP architectures with server registration, authentication, and message routing

- Agent2Agent communication protocols where specialized AI agents collaborate to solve complex industrial problems

- Production-ready patterns for orchestrating distributed intelligence across factory systems
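
As a rough mental model of "server registration, authentication, and message routing," the sketch below keeps a registry of named servers, checks a token before accepting a registration, and forwards each message to the server it targets. It is a plain-Python toy, not the MCP SDK and not the workshop material. The class name, the shared-token scheme, and the message shape (`target` and `payload` keys) are all assumptions for illustration.

```python
import hmac
from dataclasses import dataclass, field
from typing import Callable, Dict

Handler = Callable[[dict], dict]


@dataclass
class ServerRegistry:
    """Toy registry: authenticate servers at registration, then route messages by name."""
    tokens: Dict[str, str]  # server name -> expected token (hypothetical shared-secret auth)
    handlers: Dict[str, Handler] = field(default_factory=dict, init=False)

    def register(self, name: str, token: str, handler: Handler) -> None:
        expected = self.tokens.get(name)
        # compare_digest avoids leaking token contents through timing differences.
        if expected is None or not hmac.compare_digest(expected, token):
            raise PermissionError(f"registration rejected for server {name!r}")
        self.handlers[name] = handler

    def route(self, message: dict) -> dict:
        target = message["target"]
        if target not in self.handlers:
            raise LookupError(f"no registered server named {target!r}")
        return self.handlers[target](message["payload"])


if __name__ == "__main__":
    registry = ServerRegistry(tokens={"historian": "s3cret", "mes": "t0ken"})
    registry.register("historian", "s3cret", lambda p: {"tag": p["tag"], "value": 21.5})
    registry.register("mes", "t0ken", lambda p: {"order": p["order"], "status": "running"})

    print(registry.route({"target": "historian", "payload": {"tag": "line1.temp"}}))
    print(registry.route({"target": "mes", "payload": {"order": "WO-1001"}}))
```

A real multi-server setup would add transport, capability discovery, and proper credentials, but the register-then-route split shown here is the part that lets one agent reach many specialized servers through a single entry point.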

The Format:

- Day 1: Advanced MCP multi-server architectures (December 16, 9am-1pm CT)

- Day 2: Agent2Agent collaborative intelligence (December 17, 9am-1pm CT)

- Live follow-along coding + full recording access for all registrants

Early Bird Pricing: $375 through November 14 (regular $750)

Whether you're architecting UNS environments, building agentic AI systems, or just tired of single-server MCP limitations, this workshop gives you the architecture patterns and implementation playbook to scale.

Why it matters: MCP is rapidly becoming the backbone for connecting AI agents to industrial data. Understanding how to orchestrate multiple servers and enable agent-to-agent collaboration isn't just a nice-to-have—it's the foundation for autonomous factory operations.

Byte-Sized Brilliance

The First “Cyber Monday” Was Basically Just People Shopping at Work

When Cyber Monday debuted in 2005, retailers didn’t expect a shopping holiday — they expected people returning to the office after Thanksgiving… and finally getting access to faster internet than they had at home. Most households were still on slow DSL or dial-up, so millions of shoppers waited until Monday morning to buy holiday tech deals from their work computers.

So yes — the tradition of “getting back to work” by immediately not working was baked into tech culture from the start.
