4 Things Industry 4.0 11/10/2025

Happy November 10th!

It's the middle of November, which means three things:

- Your inbox is full of "Black Friday Early Access!!!" emails (even though Black Friday is still almost three weeks away)

- Someone in your office has already started playing Christmas music

- Every executive in manufacturing is asking, "How are we going to close Q4?"

Speaking of Q4 closing panic: while retailers are scrambling to predict consumer demand and optimize their supply chains for the holiday rush, the manufacturing world just dropped some news that changes the IIoT landscape forever. TPG—a private equity firm with $286 billion under management—just scooped up Kepware and ThingWorx from PTC.

If you're thinking "that's just M&A noise," think again. TPG already owns GE Vernova's Proficy platform. Now they've got two of the biggest names in industrial connectivity and IoT. That's not portfolio diversification. That's a play.

But that's not the only thing we're covering this week. We've also got:

- How Slack cut their build time from 60 minutes to 25 (and what it means for your MES updates)

- A tutorial on building MCP servers so AI can actually talk to your factory systems

- Google's new log analysis tool that doesn't require a PhD in SQL

Turns out, while everyone else is focused on holiday shopping, the smart money is betting on the tools that connect the factory floor to the rest of the business.

Here's what caught our attention:

TPG Acquires Kepware and ThingWorx—The IIoT Consolidation You Need to Know About

PTC just announced they're selling Kepware and ThingWorx (two names you've definitely heard if you've ever connected a PLC to anything) to TPG, a global private equity firm with $286 billion in assets under management. The deal is expected to close in the first half of 2026, subject to regulatory approval.

The players:

Kepware is the industrial connectivity platform that's everywhere. If you've got data moving between PLCs, SCADA systems, historians, or MES databases, there's a very good chance Kepware is the glue making it happen.

ThingWorx is PTC's comprehensive IoT platform for connecting systems, analyzing data, and managing devices remotely at enterprise scale.

Why the split?

PTC is doubling down on its "Intelligent Product Lifecycle" vision—focusing on CAD, PLM, ALM, SLM, and AI. CEO Neil Barua made it clear: they're sharpening their focus on product design and lifecycle management, not industrial connectivity.

TPG sees it differently. Partner Art Heidrich calls this "a generational opportunity to evolve manufacturing through solutions that bridge the gap between operational and information technology."

Here's what makes this interesting: TPG recently acquired Proficy—GE Vernova's entire manufacturing software business. Now they're adding Kepware and ThingWorx.

They're building a full-stack industrial software portfolio: historians, HMI/SCADA, MES, connectivity, and IoT. That's not portfolio diversification—that's infrastructure consolidation.

Should you be worried?

Here's the thing about private equity acquisitions: the goal is ROI, not innovation. And while TPG promises "business as usual," current Kepware customers should be paying close attention to what happens next.

Questions worth asking:

- Will licensing costs increase after the transition?

- Will support and development slow down during integration?

- How will TPG's Proficy + Kepware + ThingWorx integration affect existing architectures?

Someone's already positioning for this moment:

Litmus Automation's CEO Vatsal Shah posted on LinkedIn that they've "been preparing for this moment" and have developed a dedicated migration program with tooling to make the transition seamless for customers considering a move from Kepware/ThingWorx to Litmus Edge.

Whether that's opportunistic or prescient depends on how this acquisition plays out. But the migration path exists if you're looking for alternatives.

Want Walker's take?

Walker Reynolds is doing a livestream THIS MORNING at 9:00 AM CST to break down what this acquisition really means for the industry, what Kepware customers should be thinking about, and where industrial connectivity is headed. Don't miss it.

Watch Walker's livestream at 9AM CST →

The bottom line:

When private equity drops this kind of capital into OT/IT convergence, it's validation that industrial digital transformation isn't hype—it's infrastructure. But for Kepware's tens of thousands of customers, the next 12-18 months will reveal whether this is a growth story or a consolidation play.

How Slack Cut Build Time from 60 Minutes to 25 (And What It Means for Your Factory Deployments)

Slack's engineering team just published something your DevOps people will love: how they slashed their Quip and Slack Canvas backend build pipeline from 60 minutes down to 25 minutes—without throwing money at servers or hiring a massive team.

The secret? Ruthlessly eliminating dependencies.

What they did:

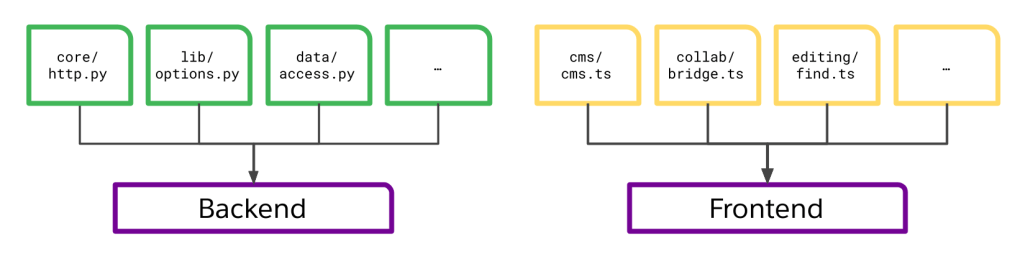

By combining Bazel (an open-source build tool) with classic software engineering principles, they achieved up to 6x faster builds overall. When the frontend was cached, builds dropped to as little as 25 minutes.

The three moves that mattered:

- Severed dependencies between frontend and backend – When your frontend changes force backend rebuilds, you're wasting time. They completely separated these layers.

- Rewrote Python orchestration in Starlark – Moved build logic into Bazel's native language, making the build graph dramatically more efficient.

- Composed granular units of work – Broke monolithic build steps into smaller, cacheable units that only rebuild what actually changed.

The real insight? Their analysis revealed that build code was intertwined with backend code. By enforcing separation of concerns and rewriting the build logic, they dramatically improved cache hit rates.
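To make the "granular, cacheable units" idea concrete, here is a minimal Python sketch. It is not Slack's Bazel setup: the `BuildCache` class, the step names, and the fake "build" actions are all hypothetical. It only illustrates the principle that a step keyed on its own declared inputs does not rerun when an unrelated layer changes.

```python
import hashlib
from typing import Callable, Dict, List


class BuildCache:
    """Toy content-addressed cache: a step reruns only when its inputs change."""

    def __init__(self) -> None:
        self._results: Dict[str, str] = {}  # cache key -> step output
        self.executed: List[str] = []       # names of steps that actually ran

    def run(self, name: str, inputs: Dict[str, str],
            action: Callable[[Dict[str, str]], str]) -> str:
        # The key covers the step name plus every declared input, in stable order.
        digest = hashlib.sha256(name.encode())
        for path in sorted(inputs):
            digest.update(path.encode() + b"\0" + inputs[path].encode() + b"\0")
        key = digest.hexdigest()
        if key not in self._results:        # cache miss: do the work once
            self.executed.append(name)
            self._results[key] = action(inputs)
        return self._results[key]


def build_all(cache: BuildCache, frontend_src: Dict[str, str],
              backend_src: Dict[str, str]) -> None:
    # Frontend and backend declare separate inputs, so they cache independently.
    cache.run("frontend", frontend_src, lambda src: f"bundle of {len(src)} files")
    cache.run("backend", backend_src, lambda src: f"binary of {len(src)} files")


cache = BuildCache()
frontend = {"app.js": "console.log('v1')"}  # hypothetical source files
backend = {"server.py": "print('v1')"}

build_all(cache, frontend, backend)
first_run = list(cache.executed)            # both steps run on a cold cache

cache.executed.clear()
frontend["app.js"] = "console.log('v2')"    # change the frontend only
build_all(cache, frontend, backend)
second_run = list(cache.executed)           # backend result comes from cache
```

If the backend step had listed the frontend files among its inputs, the second build would have rerun both steps. That is the kind of hidden coupling the separation removes.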

Why this matters for manufacturing:

You might be thinking, "I don't work at Slack." Fair enough. But if you're running:

- MES databases that need regular updates

- SCADA systems with custom modules

- Ignition projects deployed across multiple sites

- Custom automation tools for the plant floor

...you're probably dealing with the same problem: deployment pipelines that take forever because everything's interconnected.

The real lesson isn't about Bazel specifically—it's about the principle: when deployment takes too long, the problem is usually architectural, not computational.

Dependencies are the enemy of speed.

Practical takeaway:

Next time your team says "we can't deploy that change without taking the whole system offline for an hour," ask: what if we rebuilt this so components were truly independent?

Manufacturing can't afford 60-minute deployment windows during production hours. But 25 minutes? That's a scheduled break. That's a maintenance window. That's actually manageable.

The faster you can deploy changes, the faster you can respond to production needs, fix issues, and roll out improvements. This isn't just about developer convenience—it's about operational agility.

Read the technical breakdown →

Let's Build an MCP Server (Or: How to Actually Connect AI to Your Factory Systems)

Model Context Protocol (MCP) is rapidly becoming the backbone for connecting AI agents to industrial data. And this tutorial shows you exactly how to build one with surprisingly little code.

If you've been wondering how to get AI assistants to actually do something useful with your factory data, this is your answer.

What is MCP?

Think of it as a universal translator between AI systems and your data sources. Instead of building custom integrations for every AI tool and every database, MCP provides a standard protocol.

Build once, connect everywhere.

The tutorial walks through:

- Creating a Python MCP server project

- Installing dependencies (mcp[cli] and httpx)

- Defining tools that retrieve data from APIs

- Testing the server using MCP Inspector

- Connecting to Claude (or other AI assistants)
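For a sense of scale, the steps above can be sketched roughly as follows. This assumes the official MCP Python SDK (`pip install "mcp[cli]"`) and its `FastMCP` helper. The API URL, the `get_machine_status` function, and the response fields are hypothetical stand-ins, and the sketch uses the standard library's `urllib` where the tutorial uses `httpx`, so the tool logic has no extra dependencies.

```python
import json
import urllib.request
from typing import Any, Callable, Dict

API_BASE = "http://localhost:8080/api"  # hypothetical plant API, not a real endpoint


def http_get_json(url: str) -> Dict[str, Any]:
    """Fetch a URL and decode its JSON body (stdlib stand-in for httpx)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def get_machine_status(machine_id: str,
                       fetch: Callable[[str], Dict[str, Any]] = http_get_json) -> str:
    """Return a one-line status summary for a machine (assumed response shape)."""
    data = fetch(f"{API_BASE}/machines/{machine_id}/status")
    state = data.get("state", "unknown")
    temp = data.get("temp_c", "n/a")
    return f"{machine_id}: state={state}, temp_c={temp}"


def build_server():
    """Wrap the function as an MCP tool. Imported lazily so the logic above
    can be tested without the SDK installed."""
    from mcp.server.fastmcp import FastMCP

    server = FastMCP("factory-demo")

    @server.tool()
    def machine_status(machine_id: str) -> str:
        """Get the current status of a machine by ID."""
        return get_machine_status(machine_id)

    return server


# To serve over stdio (for MCP Inspector or a desktop AI client):
#     build_server().run(transport="stdio")
```

Keeping the data-fetching logic in a plain function and registering a thin wrapper as the tool is a design choice, not a requirement. It lets you unit-test the logic with a fake `fetch` before any AI client is involved.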

Here's what makes this powerful for manufacturing:

The tutorial uses a simple API example, but swap that for your:

- Ignition Gateway API

- Historian REST endpoints

- MES database queries

- CMMS ticket systems

- OPC UA servers

...and suddenly you have an AI assistant that can answer questions like:

- "What was our OEE on Line 3 last Tuesday?"

- "Show me all open maintenance tickets for Building 2"

- "Which machines had unplanned downtime this week?"

- "Pull the last 24 hours of temperature data from Reactor 5"
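Behind a question like the OEE one, the tool itself is usually ordinary code. Here is a minimal sketch using the standard OEE definition (availability × performance × quality). The `ShiftData` fields and the Line 3 numbers are invented for illustration, and in practice they would come from your MES or historian.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ShiftData:
    planned_min: float      # planned production time, minutes
    run_min: float          # time the line actually ran, minutes
    ideal_cycle_min: float  # ideal minutes per part
    total_count: int        # parts produced
    good_count: int         # parts that passed quality checks


def oee(shift: ShiftData) -> Dict[str, float]:
    """Compute OEE and its three factors for one shift."""
    availability = shift.run_min / shift.planned_min
    performance = (shift.ideal_cycle_min * shift.total_count) / shift.run_min
    quality = shift.good_count / shift.total_count
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical numbers for "Line 3 last Tuesday".
line3 = ShiftData(planned_min=400, run_min=320, ideal_cycle_min=0.4,
                  total_count=720, good_count=648)
result = oee(line3)
print(f"OEE: {result['oee']:.1%}")
```

An MCP tool would return `result` (or a sentence built from it), and the AI assistant handles turning the question into the right tool call.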

Why it matters:

Every manufacturing operation is drowning in data but starving for insights. Your team shouldn't need to write SQL queries or navigate six different dashboards to answer simple operational questions.

MCP lets you build a natural language interface to your factory systems. Not as a replacement for HMIs or dashboards—but as a way to make operational data accessible to anyone on the team.

The real-world scenario:

Imagine your maintenance supervisor asking Claude: "Which motors are running hot today?"

And getting an instant answer pulled from your vibration monitoring system, thermal imaging data, and work order history—all through a single MCP server you built in an afternoon.

That's not science fiction. That's MCP. And it's surprisingly straightforward to implement.

The bottom line:

As AI becomes more capable, the bottleneck isn't the AI itself—it's getting it connected to your data. MCP solves that problem with a standardized approach that works across platforms.

If you're building the smart factory of the future, you're going to need a way for AI to talk to your systems. MCP is quickly becoming the standard for how that happens.

Read tutorials and documentation →

A Word from This Week's Sponsor

Speaking of the right architecture... (see what we did there?)

If you just read the previous article and thought "yep, that's us—we've got data chaos and systems that don't talk to each other"—meet Fuuz.

Fuuz is the first AI-driven Industrial Intelligence Platform built specifically to solve the data nightmare that's holding manufacturers back. It unifies your siloed systems—MES, WMS, CMMS, whatever alphabet soup you're running—into one connected environment where everything actually talks to each other.

Why it matters:

- Multi-tenant mesh architecture: Deploy on cloud, edge, or hybrid without rebuilding everything

- Low-code/no-code + pro-code: Your business users and developers can both build fast

- Hundreds of pre-built integrations: No more custom integration hell

- Unified data layer: Real-time analytics and AI-powered operations actually work when your data isn't scattered across 47 systems

Whether you're extending existing infrastructure or building new capabilities, Fuuz gives you the foundation to scale industrial applications without the usual pain.

Works for both discrete and process manufacturing. No complete tech stack rebuild required.

Google's New Log Analytics Query Builder—No SQL Required (Finally)

Google Cloud just released a UI-based tool that lets you analyze logs without knowing SQL. And if you've ever tried to troubleshoot a production issue at 2 AM by staring at raw log files, you know this is a big deal.

What it does:

The Log Analytics Query Builder is a visual interface that generates SQL queries for you. You pick what you want to see, it builds the query behind the scenes, and you get:

- Real-time SQL previews

- Intuitive JSON handling

- Faster troubleshooting

- Visualizations without writing code
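Conceptually, a visual query builder maps the filters you click to a SQL statement. The sketch below shows that mapping in miniature. It is not Google's implementation: the `logs` table, its columns, and the sample rows are hypothetical, and it runs against an in-memory SQLite database purely so it works anywhere.

```python
import sqlite3
from typing import Any, Dict, List, Tuple

ALLOWED_COLUMNS = {"machine", "severity", "error_code"}  # hypothetical schema


def build_query(filters: Dict[str, Any], limit: int = 100) -> Tuple[str, List[Any]]:
    """Turn UI-style filter selections into a parameterized SQL query."""
    clauses: List[str] = []
    params: List[Any] = []
    for column, value in sorted(filters.items()):
        if column not in ALLOWED_COLUMNS:   # never interpolate unknown names
            raise ValueError(f"unknown filter column: {column}")
        clauses.append(f"{column} = ?")
        params.append(value)
    where = f" WHERE {' AND '.join(clauses)}" if clauses else ""
    sql = (f"SELECT ts, machine, severity, message FROM logs{where} "
           "ORDER BY ts DESC LIMIT ?")
    params.append(limit)
    return sql, params


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logs (ts TEXT, machine TEXT, severity TEXT, "
             "error_code TEXT, message TEXT)")
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", [
    ("2025-11-10T02:00:05", "line2-plc", "ERROR", "E101", "Communication fault"),
    ("2025-11-10T02:00:01", "line2-plc", "INFO", None, "Heartbeat ok"),
    ("2025-11-10T02:00:04", "line3-plc", "ERROR", "E205", "Overtemp"),
])

# What the user "clicked": machine = line2-plc, severity = ERROR.
sql, params = build_query({"machine": "line2-plc", "severity": "ERROR"})
rows = conn.execute(sql, params).fetchall()
```

The "real-time SQL preview" in a tool like this is essentially the `sql` string, shown as you change filters.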

Why this matters for manufacturing:

Your SCADA system logs everything. Your PLCs log everything. Your MES logs everything. Your edge devices log everything.

But when something goes wrong at 2 AM and production stops, the person troubleshooting isn't a database administrator—it's a shift supervisor or maintenance tech who needs answers now, not after they take a SQL course.

The problem with traditional log tools:

- Too much data, not enough context

- Tools designed for software developers, not plant floor teams

- Critical information buried in JSON blobs and timestamps

- No easy way to correlate events across systems

What this changes:

Instead of calling IT at 2 AM to write a query, your team can:

- Visually filter logs by machine, timestamp, error code, or severity

- Drill into JSON payloads without parsing syntax

- Correlate events across systems (PLC logs + network logs + application logs)

- Export results that actually make sense

Real-world scenario:

Line 2 goes down. The HMI says "Communication Fault." Is it the PLC? The network? A bad cable? A software bug?

With the query builder, your team pulls up logs from the last 30 minutes, filters to Line 2 devices, and immediately sees: the Ethernet switch logged packet loss at the exact moment the fault occurred.

Not a PLC issue. Not a programming issue. A network cable that needs replacing.

Time to resolution: 15 minutes instead of 2 hours.
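The correlation step in that scenario boils down to "show me everything that happened near the fault, from every source." A minimal sketch, assuming events have already been collected into one list with a timestamp and a source label (the event shape, sample messages, and 30-second window are illustrative assumptions):

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List

Event = Dict[str, Any]  # assumed shape: {"ts": datetime, "source": str, "msg": str}


def correlate(fault_time: datetime, events: List[Event],
              window_s: int = 30) -> List[Event]:
    """Return events from any source within +/- window_s of the fault, oldest first."""
    window = timedelta(seconds=window_s)
    nearby = [e for e in events if abs(e["ts"] - fault_time) <= window]
    return sorted(nearby, key=lambda e: e["ts"])


fault = datetime(2025, 11, 10, 2, 0, 5)
events = [
    {"ts": datetime(2025, 11, 10, 1, 45, 0), "source": "plc",
     "msg": "Cycle complete"},
    {"ts": datetime(2025, 11, 10, 2, 0, 5), "source": "hmi",
     "msg": "Communication Fault"},
    {"ts": datetime(2025, 11, 10, 2, 0, 4), "source": "switch",
     "msg": "Packet loss on port 7"},
]

related = correlate(fault, events)
sources = [e["source"] for e in related]
for e in related:
    print(e["ts"].isoformat(), e["source"], e["msg"])
```

Seeing the switch's packet-loss entry one second before the HMI fault is what points the team at the network rather than the PLC.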

The bottom line:

Log analysis shouldn't require a computer science degree. When Google—a company that practically invented big data—decides to make log analysis point-and-click, they're acknowledging what manufacturing has known forever:

The best tools are the ones anyone on your team can use when they need them most.

Read about the query builder →

Learning Lens

Advanced MCP + Agent to Agent: The Workshop You've Been Asking For

If you've been building with MCP and wondering how to take it to the next level—multi-server architectures, agent orchestration, and distributed intelligence—this one's for you.

On December 16-17, Walker Reynolds is running a live, two-day workshop that goes deep on Advanced MCP and Agent2Agent (A2A) protocols. This isn't theory—it's hands-on implementation of the patterns that enable collaborative AI systems in manufacturing.

Here's what you'll build:

- Multi-server MCP architectures with server registration, authentication, and message routing

- Agent2Agent communication protocols where specialized AI agents collaborate to solve complex industrial problems

- Production-ready patterns for orchestrating distributed intelligence across factory systems

The Format:

- Day 1: Advanced MCP multi-server architectures (December 16, 9am-1pm CST)

- Day 2: Agent2Agent collaborative intelligence (December 17, 9am-1pm CST)

- Live follow-along coding + full recording access for all registrants

Early Bird Pricing: $375 through November 14 (regular $750)

Whether you're architecting UNS environments, building agentic AI systems, or just tired of single-server MCP limitations, this workshop gives you the architecture patterns and implementation playbook to scale.

Why it matters: MCP is rapidly becoming the backbone for connecting AI agents to industrial data. Understanding how to orchestrate multiple servers and enable agent-to-agent collaboration isn't just a nice-to-have—it's the foundation for autonomous factory operations.

Byte-Sized Brilliance

The average manufacturing facility generates about 2,200 terabytes of data per year. That's more data than Netflix streams globally in a single day.

But here's the kicker: according to McKinsey, less than 1% of that data is actually analyzed and used for decision-making.

Think about that for a second. Your machines are screaming valuable insights at you 24/7—vibration data, temperature trends, cycle times, quality metrics, energy consumption—and 99% of it just... sits there. Collecting digital dust in a historian database nobody ever queries.

Meanwhile, a single unplanned downtime event costs manufacturers an average of $260,000 per hour. And in most cases, the data that could have predicted that failure was logged three days earlier. It was just buried in 2.2 petabytes of noise.

This is why tools like Google's Log Analytics Query Builder, MCP servers, and modern connectivity platforms aren't nice-to-haves anymore—they're survival tools. The factories winning in 2025 aren't necessarily the ones with the most data. They're the ones who can actually find the signal in the noise when it matters most.

Your great-great-grandparents worried about whether they had enough data. You're worried about drowning in it. Progress is weird like that.
